Data Factory in Microsoft Fabric

- ETL / ELT

- November 05, 2024



Data Factory makes it easy to work with many types of data from a variety of sources, such as databases, data warehouses, and real-time data. Whether we are beginners or experienced developers, we can use its intelligent tools to organize and transform that data.

In Microsoft Fabric, Data Factory introduces Fast Copy, which swiftly moves data between different storage systems. This feature is particularly useful for quickly transferring data to your data warehouse or Lakehouse for analysis.

Data Factory implements two primary high-level features:

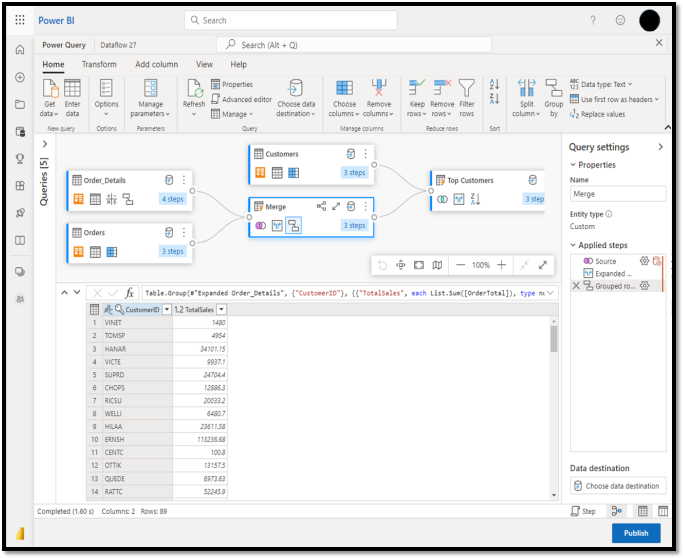

1) Dataflows: Dataflows provide a low-code interface for ingesting data from hundreds of data sources and transforming it with 300+ data transformations. We can then load the resulting data into multiple destinations, such as Azure SQL databases and more.

- Dataflows can be run repeatedly using manual or scheduled refresh or as part of a data pipeline orchestration.

- Dataflows are built using the familiar Power Query experience that's available today across several Microsoft products and services such as Excel, Power BI, Power Platform, Dynamics 365 Insights applications, and more.

- Power Query empowers all users, from citizen developers to professional data integrators, to perform data ingestion and data transformations across their data estate.

- Perform joins, aggregations, data cleansing, custom transformations, and much more, all from an easy-to-use, highly visual, low-code UI.
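To make the three most common dataflow steps concrete, here is a minimal Python sketch of cleansing, joining, and aggregating two small tables. This is only an analogy for what the Power Query UI does visually; it is not Power Query M or any Fabric API, and the `orders`/`customers` tables and helper names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List

# Hypothetical source tables standing in for two connected data sources.
orders = [
    {"order_id": 1, "customer_id": "C1", "amount": "120.50"},
    {"order_id": 2, "customer_id": "c2 ", "amount": "80"},
    {"order_id": 3, "customer_id": "C1", "amount": None},  # dirty row: missing amount
    {"order_id": 4, "customer_id": "C3", "amount": "42.00"},
]
customers = [
    {"customer_id": "C1", "region": "West"},
    {"customer_id": "C2", "region": "East"},
    {"customer_id": "C3", "region": "West"},
]

def cleanse(rows: List[dict]) -> List[dict]:
    """Drop rows with a missing amount, normalize keys (trim + upper-case), cast amounts to float."""
    out = []
    for row in rows:
        if row["amount"] is None:
            continue
        out.append({**row,
                    "customer_id": row["customer_id"].strip().upper(),
                    "amount": float(row["amount"])})
    return out

def inner_join(left: List[dict], right: List[dict], key: str) -> List[dict]:
    """Inner join two lists of dicts on a shared key column."""
    index = {row[key]: row for row in right}
    return [{**row, **index[row[key]]} for row in left if row[key] in index]

def aggregate(rows: List[dict], group_by: str, value: str) -> Dict[str, float]:
    """Sum a numeric column per group, like a Group By step."""
    totals: Dict[str, float] = defaultdict(float)
    for row in rows:
        totals[row[group_by]] += row[value]
    return dict(totals)

result = aggregate(inner_join(cleanse(orders), customers, "customer_id"), "region", "amount")
print(result)  # {'West': 162.5, 'East': 80.0}
```

In a real dataflow, each of these functions would correspond to an applied step in the Power Query editor, and the final result would be written to a destination rather than printed.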

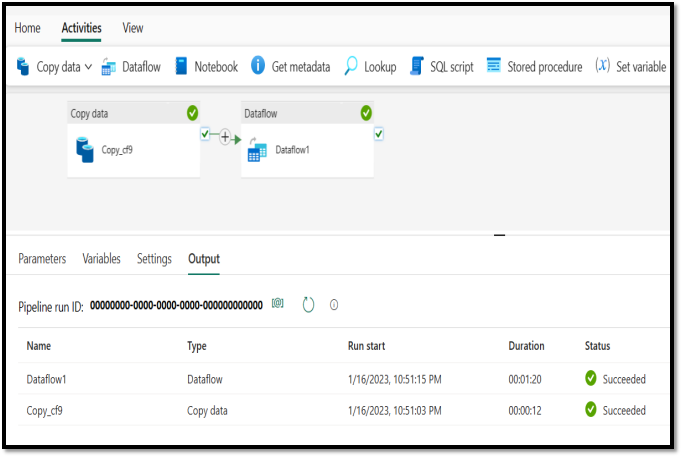

2) Data Pipelines:

Data pipelines enable powerful workflow capabilities at cloud-scale.

- With data pipelines, you can build complex workflows that refresh your dataflows, move petabyte-scale data, and define sophisticated control flow pipelines.

- Use data pipelines to build complex ETL and data factory workflows that perform many different tasks at scale. Control flow capabilities built into data pipelines let you define workflow logic with loops and conditionals.

- Add a configuration-driven copy activity together with your low-code dataflow refresh in a single pipeline for an end-to-end ETL data pipeline. We can even add code-first activities for Spark notebooks, SQL scripts, stored procedures, and more.
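The orchestration described above (copy activities, a loop, a conditional, then a dataflow refresh and a notebook) can be sketched as a toy, sequential Python pipeline. This is a conceptual illustration only, not the Fabric pipeline engine or its REST API; the activity functions, table names, row counts, and item names (`CleanSales`, `BuildAggregates`) are all hypothetical. The loop and branch roughly mirror the ForEach and If Condition activities available in pipelines.

```python
from typing import Dict, List

# Hypothetical stand-ins for pipeline activities; each appends to a run log.
def copy_table(table: str, log: List[str]) -> int:
    """Pretend to copy a table into the lakehouse and return a fake row count."""
    rows = {"sales": 1200, "customers": 0, "products": 350}.get(table, 0)
    log.append(f"copy {table}: {rows} rows")
    return rows

def refresh_dataflow(name: str, log: List[str]) -> None:
    """Pretend to refresh a low-code dataflow."""
    log.append(f"refresh dataflow {name}")

def run_notebook(name: str, log: List[str]) -> None:
    """Pretend to run a code-first Spark notebook activity."""
    log.append(f"run notebook {name}")

def run_pipeline(tables: List[str]) -> List[str]:
    """Run copies in a loop, branch on each result, then transform if any data landed."""
    log: List[str] = []
    copied: Dict[str, int] = {}
    for table in tables:              # loop over tables (ForEach-style)
        rows = copy_table(table, log)
        if rows > 0:                  # branch on the copy result (If Condition-style)
            copied[table] = rows
        else:
            log.append(f"skip {table}: empty")
    if copied:                        # only refresh/transform when something was copied
        refresh_dataflow("CleanSales", log)
        run_notebook("BuildAggregates", log)
    return log

for line in run_pipeline(["sales", "customers", "products"]):
    print(line)
```

Running it prints the copy results for the three tables, a skip notice for the empty `customers` table, and then the dataflow refresh and notebook steps. In an actual pipeline, these steps would be configured as activities on the canvas, with dependencies drawn between them instead of written as sequential calls.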

Start Your Data Journey Today With MSA Infotech

Take the first step towards data-led growth by partnering with MSA Infotech. Whether you seek tailored solutions or expert consultation, we are here to help you harness the power of data for your business. Contact us today and let’s embark on this transformative data adventure together. Get a free consultation today!
