Transforming Data Management for a Retail Client with SSIS

- ETL / ELT

- November 13, 2024

-

Transforming Data Management for a Retail Client with SSIS

Introduction

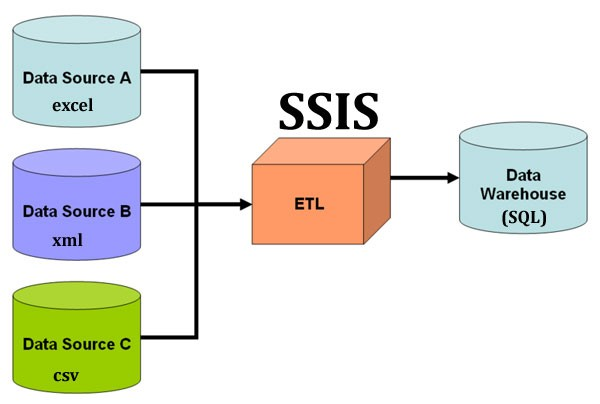

In today’s data-driven world, efficient data integration is crucial for businesses, especially in industries with high transaction volumes and multiple data sources. SQL Server Integration Services (SSIS) is a powerful tool for managing and transforming data, making it a valuable asset for organizations looking to streamline their data workflows. In this blog, we’ll explore how we used SSIS to optimize data processing for a retail client, enabling them to make timely and data-driven decisions that significantly improved their operational efficiency.

Client Overview and Requirements

Our client, a mid-sized retail chain with stores in multiple locations, faced several challenges in handling their data. Each store generated a substantial amount of transactional data daily, including sales, inventory updates, and customer feedback. The client’s key requirements included:

- Centralized Data Consolidation: The client needed to consolidate data from multiple stores into a central SQL Server database to gain a comprehensive view of their operations.

- Data Transformation and Cleansing: Due to variations in data format and quality across stores, the data required transformation and cleansing before loading into the central database.

- Scheduled ETL Process: The client wanted an automated, scheduled ETL (Extract, Transform, Load) process that would refresh their data daily without manual intervention.

- Data Quality and Error Handling: Any data errors during the ETL process needed to be handled gracefully, with error logs generated for review by the data management team.

The client had previously been manually consolidating data using Excel and other tools, which was time-consuming and prone to errors. They sought a solution that would make data consolidation seamless and reliable.

Solution: Implementing SSIS for Efficient Data Integration

To meet the client's requirements, we designed and implemented an SSIS-based ETL solution. SSIS provided a robust framework for handling data integration, transformation, and error management, allowing us to create an automated and efficient data pipeline.

Key Components of the Solution

Here's a breakdown of how we implemented the SSIS solution for the client:

1. Data Extraction from Multiple Sources

- Challenge: Each retail location had its own database, with variations in schema and format. We needed to pull data from multiple sources, standardize it, and consolidate it.

- Solution: We used SSIS to create separate Data Flow Tasks for each source. These tasks extracted data from each store’s database using SQL queries or stored procedures and brought it into SSIS’s memory for processing.

- Connection Managers: SSIS Connection Managers were configured to handle the different database connections dynamically, allowing us to pull data from each store seamlessly.

2. Data Transformation and Cleansing

- Challenge: Data needed to be transformed and standardized. For example, each store had different naming conventions for products, categories, and other attributes.

- Solution: SSIS’s Data Flow Components enabled us to clean and transform data on the fly. Here’s how we used some key transformation components:

- Derived Column Transformation: We standardized product names and categories using SSIS expressions, ensuring consistency across all stores.

- Data Conversion Transformation: We converted data types, such as dates and prices, to ensure compatibility with the central database.

- Lookup Transformation: We used the Lookup Transformation to match store-specific product codes with a master product table, ensuring consistency in reporting.

- Conditional Splits: To handle cases where data quality was low or values were missing, we implemented Conditional Split transformations to separate problematic records, which were then logged and handled separately.

3. Data Loading to the Centralized Database

- Challenge: Once transformed, the data needed to be loaded into a central SQL Server database without impacting its performance.

- Solution: We used OLE DB Destination in SSIS to load data into the central database. Key practices implemented included:

- Batch Processing: We loaded data in batches, which minimized the load on the SQL Server and ensured efficient resource usage.

- Transactional Load: We wrapped each data load into transactions, ensuring that any errors or failures would roll back the data load to maintain data integrity.

- Table Partitioning: In the SQL Server database, we partitioned large tables by date, which improved query performance and made loading faster.

4. Error Handling and Logging

- Challenge: With a high volume of data and multiple sources, errors were inevitable, and the client needed robust error handling and logging.

- Solution: SSIS offers excellent error-handling capabilities, which we used to implement detailed error logging. Our approach included:

- Error Output Paths: Each Data Flow Task had error output paths, which redirected problematic rows to an error table, allowing data to continue flowing without interruption.

- Event Handlers: SSIS’s Event Handlers captured errors at different stages and logged them in a SQL Server logging table, along with detailed error messages for troubleshooting.

- Email Notifications: Using SSIS’s Send Mail Task, we configured automated email alerts to notify the client’s data management team of critical errors, along with the affected records and error details.

5. Scheduling and Automation with SQL Server Agent

- Challenge: The client needed the ETL process to run daily without manual intervention.

- Solution: We scheduled the SSIS packages using SQL Server Agent, setting them to run at the end of each business day. The automated scheduling allowed the ETL process to run overnight, ensuring that the client’s data was ready for analysis every morning.

- Logging and Monitoring: SQL Server Agent provided detailed logs for each run, allowing the client’s IT team to monitor job performance and troubleshoot if needed.

Implementation Process

1. Initial Requirements Gathering and Planning

We began by gathering detailed requirements from the client’s data management and IT teams. This involved identifying data sources, understanding data quality issues, and mapping out the ETL process.

2. SSIS Package Development and Testing

Our development team created individual SSIS packages for each step in the ETL pipeline. We rigorously tested each package to ensure accurate data extraction, transformation, and loading, and that error-handling mechanisms worked as expected.

3. User Training and Documentation

Once the solution was tested and deployed, we conducted training sessions with the client’s team, covering package configuration, logging, and troubleshooting common issues. We also provided documentation on each package and how to monitor scheduled jobs in SQL Server Agent.

4. Ongoing Maintenance and Optimization

We continue to provide ongoing support, optimizing the SSIS packages as the client’s data volume grows and business needs evolve.

Benefits Achieved

- Centralized Data Access: The client now has a consolidated view of their data, enabling them to make informed decisions across all store locations.

- Data Quality and Consistency: With automated data transformation and cleansing, the client enjoys consistent, high-quality data across their reporting systems.

- Efficiency and Automation: The scheduled ETL process has significantly reduced manual data handling, freeing up resources for more strategic tasks.

- Error Management: The detailed logging and error-handling system ensures that the client can quickly identify and resolve data issues, maintaining high data integrity.

Conclusion

This real-world example illustrates how SSIS can transform data management for businesses, making it easier to consolidate, clean, and load data from multiple sources into a central repository. By automating the ETL process and ensuring high data quality, SSIS empowered our client to streamline their operations, make data-driven decisions, and improve efficiency across their retail chain.

If your organization is struggling with data integration challenges, SQL Server Integration Services (SSIS) might be the solution you need. This project showcases how SSIS can streamline complex ETL processes, providing businesses with clean, centralized data ready for analysis.

Start Your Data Journey Today With MSAInfotech

Take the first step towards data-led growth by partnering with MSA Infotech. Whether you seek tailored solutions or expert consultation, we are here to help you harness the power of data for your business. Contact us today and let’s embark on this transformative data adventure together. Get a free consultation today!

We utilize data to transform ourselves, our clients, and the world.

Partnership with leading data platforms and certified talents