Driving Efficiency and Insight with SSIS: A Client Success Story

- November 08, 2024

Introduction: As businesses grow, managing data efficiently becomes both a challenge and a necessity. For organizations dealing with large volumes of data, effective ETL (Extract, Transform, Load) processes are crucial for timely, accurate insights. Recently, we helped a client streamline their data integration and reporting using SQL Server Integration Services (SSIS). This case study demonstrates how SSIS transformed data processing for our client, allowing them to make data-driven decisions faster and more accurately.

Challenge: Inconsistent and Fragmented Data Across Multiple Sources

Our client faced a range of data-related challenges:

- Data Fragmentation: Data was spread across multiple systems, including CRM, ERP, and other custom databases, making it difficult to get a unified view of key metrics.

- Manual Data Handling: Extracting, transforming, and loading data from these sources was largely manual, leading to high labor costs, delays, and frequent errors.

- Inconsistent Reporting: Without a centralized ETL process, reports were often inconsistent and unreliable, resulting in decision-making based on inaccurate data.

- Scalability Issues: As the business expanded, their existing data workflows struggled to keep up with the increased volume, impacting operational efficiency.

These challenges not only affected their reporting and analytics but also limited their ability to gain timely insights for decision-making.

Solution: Implementing SSIS for Robust ETL and Data Integration

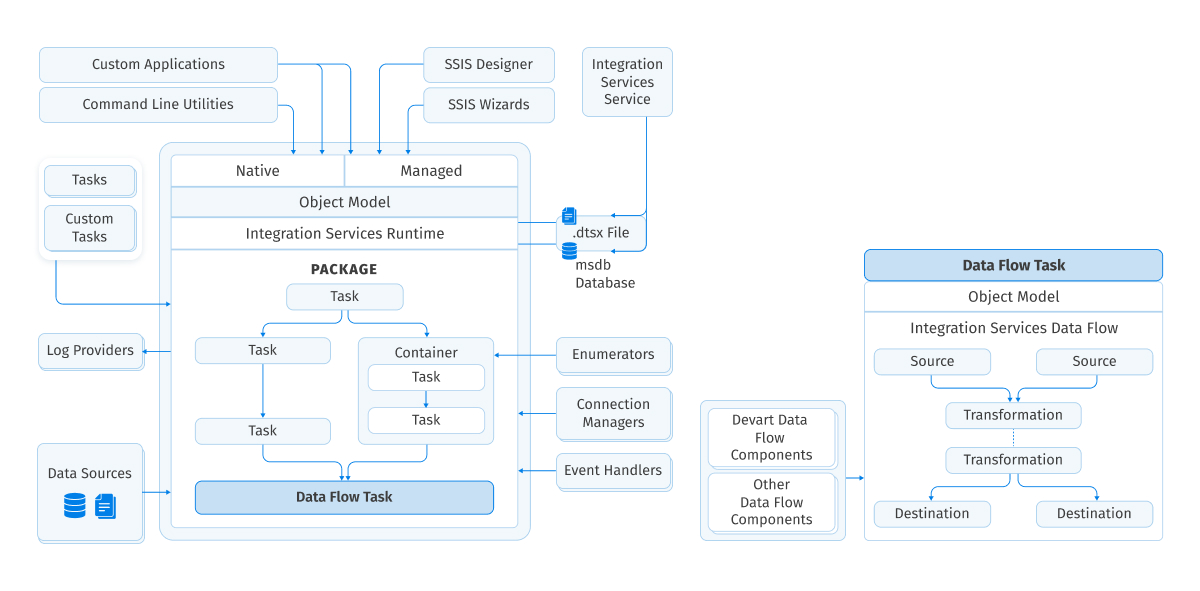

After evaluating the client’s needs, we proposed using SQL Server Integration Services (SSIS) to build a robust, automated ETL process. SSIS allowed us to design custom workflows that seamlessly pulled data from multiple sources, transformed it, and loaded it into a centralized data warehouse. Here’s how SSIS addressed the client’s specific challenges:

1. Automated Data Extraction from Multiple Sources

- Problem: Manually extracting data from multiple sources was time-consuming and error-prone.

- Solution: We configured SSIS to pull data from various sources, including SQL Server, Oracle databases, flat files, and third-party APIs. This automation allowed the client to eliminate manual data extraction, saving hours of work each week. SSIS’s versatility allowed us to connect to almost any data source, giving the client a unified view of data across systems.
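The extraction step above can be pictured with a small sketch. The actual work was done with SSIS connection managers and sources, so the Python below is only an illustration of the idea: rows from different kinds of sources are read into one common shape and tagged with their origin. The function names, the `customers` table, and the sample data are all hypothetical.

```python
import csv
import io
import sqlite3

def extract_from_flat_file(text):
    """Read delimited text (a stand-in for a flat-file source) into dict rows."""
    return [dict(row, source="flat_file") for row in csv.DictReader(io.StringIO(text))]

def extract_from_database(conn, query):
    """Run a query against a database source and return dict rows."""
    cursor = conn.execute(query)
    columns = [col[0] for col in cursor.description]
    return [dict(zip(columns, record), source="database") for record in cursor]

# Hypothetical sample sources: a CRM export file and an ERP table.
crm_export = "customer_id,name\n1,Acme Ltd\n2,Globex\n"
erp = sqlite3.connect(":memory:")
erp.execute("CREATE TABLE customers (customer_id INTEGER, name TEXT)")
erp.execute("INSERT INTO customers VALUES (3, 'Initech')")

# One staging list, regardless of where each row came from.
staged = extract_from_flat_file(crm_export) + extract_from_database(
    erp, "SELECT customer_id, name FROM customers"
)
```

Note that the flat-file rows arrive as strings while the database rows keep their column types, which is exactly the kind of inconsistency the transformation step has to resolve.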

2. Data Transformation for Consistency and Accuracy

- Problem: Data from different systems had inconsistent formats, making it difficult to produce accurate reports.

- Solution: Using SSIS transformations, we standardized data formats, removed duplicate entries, and enforced consistency across datasets. For example, we used SSIS’s data flow transformations for data type conversion, splitting and merging data, and performing calculations. The result was clean, consistent data that improved the accuracy of reports.
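To make the standardization and de-duplication concrete, here is a minimal sketch in Python. It is not the SSIS package itself. It assumes a hypothetical order feed where dates and amounts arrive in mixed formats, and the column names and accepted date formats are illustrative only.

```python
from datetime import datetime

# Hypothetical set of date formats seen across the source systems.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")

def parse_date(value):
    """Try each known format in turn and return a date object."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {value!r}")

def standardize(row):
    """Convert types and normalize formats so every source looks the same."""
    return {
        "customer_id": int(row["customer_id"]),
        "email": row["email"].strip().lower(),
        "order_date": parse_date(row["order_date"]).isoformat(),
        "amount": round(float(row["amount"].replace(",", "")), 2),
    }

def deduplicate(rows, key=("customer_id", "order_date")):
    """Keep the first row seen for each business key."""
    seen = set()
    unique = []
    for row in rows:
        identity = tuple(row[column] for column in key)
        if identity not in seen:
            seen.add(identity)
            unique.append(row)
    return unique

raw = [
    {"customer_id": "7", "email": " Ana@Example.com ", "order_date": "11/08/2024", "amount": "1,250.50"},
    {"customer_id": "7", "email": "ana@example.com", "order_date": "2024-11-08", "amount": "1250.5"},
    {"customer_id": "9", "email": "bo@example.com", "order_date": "08.11.2024", "amount": "80"},
]
clean = deduplicate([standardize(r) for r in raw])
```

The first two rows only turn out to be duplicates after standardization, which is why the cleanup runs before the de-duplication.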

3. Efficient Data Loading into a Centralized Data Warehouse

- Problem: Previously, data was loaded manually or through ad hoc processes, leading to delays and data duplication.

- Solution: We used SSIS to load data into a centralized data warehouse on a scheduled basis. By implementing incremental loading, SSIS could add only the new or updated records, reducing load times and improving efficiency. This gave the client a single, comprehensive source of truth, reducing data silos and ensuring that everyone accessed the same information.
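Incremental loading is easier to follow with an example. The sketch below uses a simple "high-watermark" approach (remember the latest modification timestamp already loaded, then fetch only newer rows) as a stand-in for the idea. It is not the mechanism used in the client's SSIS packages, and the table names, columns, and in-memory SQLite databases are assumptions made for illustration.

```python
import sqlite3

def incremental_load(source, warehouse):
    """Copy only rows modified since the last successful load."""
    (watermark,) = warehouse.execute("SELECT last_loaded FROM etl_watermark").fetchone()
    changed = source.execute(
        "SELECT id, name, modified_at FROM customers "
        "WHERE modified_at > ? ORDER BY modified_at",
        (watermark,),
    ).fetchall()
    with warehouse:  # commit the rows and the new watermark together
        warehouse.executemany(
            "INSERT OR REPLACE INTO dim_customer (id, name, modified_at) VALUES (?, ?, ?)",
            changed,
        )
        if changed:
            warehouse.execute("UPDATE etl_watermark SET last_loaded = ?", (changed[-1][2],))
    return len(changed)

# Hypothetical source system and warehouse.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, modified_at TEXT)")
source.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, "Acme", "2024-11-01"), (2, "Globex", "2024-11-02")],
)
warehouse = sqlite3.connect(":memory:")
warehouse.execute("CREATE TABLE dim_customer (id INTEGER PRIMARY KEY, name TEXT, modified_at TEXT)")
warehouse.execute("CREATE TABLE etl_watermark (last_loaded TEXT)")
warehouse.execute("INSERT INTO etl_watermark VALUES ('')")

first_run = incremental_load(source, warehouse)   # initial load picks up every row
source.execute("UPDATE customers SET name = 'Acme Ltd', modified_at = '2024-11-03' WHERE id = 1")
second_run = incremental_load(source, warehouse)  # only the updated row is reloaded
```

Updating the watermark in the same transaction as the data means a failed run leaves both untouched, so the next run simply retries the same window.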

4. Scheduling and Error Handling for Reliable Operations

- Problem: Frequent errors and lack of scheduling made it hard to maintain a smooth data pipeline.

- Solution: We scheduled the SSIS packages with SQL Server Agent, which let us run the ETL process automatically on a daily, weekly, or monthly basis. We also configured error handling within SSIS, including logging and alerts, so the client could quickly address any data issues. This improved the reliability of data operations, reducing downtime and minimizing manual intervention.
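The error-handling idea can be sketched as follows: instead of letting one bad record fail the whole run, rejected rows are logged and set aside for review while good rows continue. This is a Python illustration of that pattern under assumed names (`load_with_error_redirect`, `to_order`, the `etl.orders` logger), not the SSIS configuration used on the project.

```python
import logging

logger = logging.getLogger("etl.orders")

def load_with_error_redirect(rows, transform):
    """Transform each row; log and set aside rows that fail instead of aborting."""
    loaded, rejected = [], []
    for line_number, row in enumerate(rows, start=1):
        try:
            loaded.append(transform(row))
        except (KeyError, ValueError) as exc:
            logger.warning("row %d rejected: %s", line_number, exc)
            rejected.append({"row": row, "error": str(exc)})
    return loaded, rejected

def to_order(row):
    """Hypothetical transform: enforce required fields and numeric types."""
    return {"order_id": int(row["order_id"]), "amount": float(row["amount"])}

rows = [
    {"order_id": "1", "amount": "19.99"},
    {"order_id": "x2", "amount": "5"},   # not a valid integer id
    {"amount": "3"},                     # missing order_id
]
loaded, rejected = load_with_error_redirect(rows, to_order)
```

The `rejected` list keeps the original row next to the error message, which is what makes later troubleshooting quick.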

5. Scalability to Handle Growing Data Volumes

- Problem: The client’s previous solution wasn’t scalable, leading to slow processing as data volumes grew.

- Solution: SSIS’s high-performance data handling allowed us to process large datasets quickly and efficiently. With SSIS’s parallel processing and data partitioning, we designed workflows that scaled as data volumes increased, ensuring the client could handle growing data loads without sacrificing performance.
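As a rough picture of the partition-and-parallelize approach, the sketch below splits rows into independent buckets by key, processes the buckets concurrently, and merges the partial results. It is a Python illustration of the shape of the workflow only. The `store_id` key, the partition count, and the sample data are hypothetical, and the example makes no claim about actual performance.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(rows, partitions):
    """Assign each row to a bucket so that a given store_id always lands in one bucket."""
    buckets = [[] for _ in range(partitions)]
    for row in rows:
        buckets[row["store_id"] % partitions].append(row)
    return buckets

def summarize(bucket):
    """Total the sales amount per store within one bucket."""
    totals = {}
    for row in bucket:
        totals[row["store_id"]] = totals.get(row["store_id"], 0) + row["amount"]
    return totals

def parallel_totals(rows, partitions=4):
    """Process buckets concurrently and merge the partial results."""
    merged = {}
    with ThreadPoolExecutor(max_workers=partitions) as pool:
        for partial in pool.map(summarize, partition(rows, partitions)):
            merged.update(partial)  # safe because each store_id lives in exactly one bucket
    return merged

sales = [{"store_id": i % 5, "amount": 10} for i in range(100)]
totals = parallel_totals(sales)
```

Partitioning by key is what keeps the merge trivial: no two workers ever produce a total for the same store.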

Outcome: Achieving Data-Driven Decision-Making with SSIS

The implementation of SSIS delivered several key benefits for our client:

- Time Savings: Automated ETL workflows saved an estimated 20+ hours per week, allowing employees to focus on analysis rather than manual data processing.

- Improved Data Accuracy: With data consistency checks and automated transformations, report accuracy increased significantly, resulting in more reliable insights for decision-making.

- Faster Report Generation: By consolidating data into a single source, the client could generate reports faster, reducing the time it took to make data-driven decisions.

- Reduced Operational Costs: Automation and efficient data loading cut down on manual labor costs and eliminated the need for temporary solutions to handle large data loads.

- Scalable Architecture: SSIS enabled the client to process higher data volumes without performance issues, ensuring that their ETL solution could grow with the business.

Technical Highlights of the SSIS Solution

Here are some key technical features that contributed to the project’s success:

- Data Flow Task Customization: We used SSIS’s data flow tasks to configure complex transformations, including lookups, conditional splits, and aggregations.

- Control Flow for Complex Workflow Management: SSIS’s control flow capabilities allowed us to sequence tasks, handle parallel processing, and manage dependencies between different ETL stages.

- Error Handling and Logging: Built-in logging in SSIS captured error details, making it easy to troubleshoot and monitor the health of the ETL processes.

- Incremental Loading Strategy: Using SSIS’s Change Data Capture (CDC) components, we implemented an incremental loading process that loaded only new or modified records, reducing load time and improving efficiency.

- Data Cleansing and Quality Checks: We implemented data quality checks within SSIS to handle data cleansing tasks, ensuring that only clean, validated data reached the data warehouse.
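For readers less familiar with the data flow concepts named above, here is a small sketch of what a lookup, a conditional split, and an aggregation each do to a stream of rows. It is written in Python purely to show the logic. The reference table, threshold, and column names are hypothetical and do not come from the client's packages.

```python
from collections import defaultdict

# Hypothetical reference data used by the lookup.
REGION_BY_STORE = {101: "North", 102: "South"}

def lookup_region(rows, reference):
    """Lookup: enrich rows with a region; rows with no match go to a separate output."""
    matched, unmatched = [], []
    for row in rows:
        region = reference.get(row["store_id"])
        if region is None:
            unmatched.append(row)
        else:
            matched.append({**row, "region": region})
    return matched, unmatched

def conditional_split(rows, threshold=1000):
    """Conditional split: route rows to different outputs based on a condition."""
    large = [r for r in rows if r["amount"] >= threshold]
    standard = [r for r in rows if r["amount"] < threshold]
    return large, standard

def aggregate_by_region(rows):
    """Aggregation: total the amount per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)

orders = [
    {"store_id": 101, "amount": 1500.0},
    {"store_id": 102, "amount": 200.0},
    {"store_id": 101, "amount": 300.0},
    {"store_id": 999, "amount": 50.0},   # no matching store in the reference data
]
matched, unmatched = lookup_region(orders, REGION_BY_STORE)
large, standard = conditional_split(matched)
totals = aggregate_by_region(matched)
```

Sending unmatched rows to their own output, rather than dropping them silently, is also a simple form of the data quality check described in the last bullet.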

Conclusion: Empowering Businesses with SSIS for ETL Automation and Data Integration

This case study demonstrates how SSIS can transform data handling for organizations dealing with fragmented and inconsistent data. With its powerful ETL capabilities, SSIS not only saved our client time and resources but also provided them with a reliable, scalable solution that enables data-driven decision-making.

If your organization is struggling with data management across multiple sources, SSIS could be the solution you need to automate workflows, improve data quality, and unlock powerful insights.
