Data Engineering Best Practices: Building Robust Pipelines for Scalable Analytics

- November 05, 2024


Introduction

Data is the lifeblood of modern organizations. Whether you’re analyzing customer behavior, optimizing supply chains, or training machine learning models, robust data pipelines are essential. In this blog post, we’ll explore the key components, best practices, and real-world examples for building data pipelines that stand up to the demands of scalable analytics.

What Is a Data Pipeline?

A data pipeline is a series of processing steps that move data from its source to its destination. These steps involve transforming, cleaning, filtering, integrating, and aggregating the data. The end result is data that is ready for analysis. Each step in the pipeline depends on the successful completion of the previous one.
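To make the idea concrete, here is a minimal sketch of a pipeline as an ordered list of steps, where each step receives the output of the previous one. The step names (`clean`, `transform`, `aggregate`), the `run_pipeline` helper, and the sample records are all hypothetical, chosen only for illustration; a production pipeline would typically rely on a dedicated framework.

```python
def clean(records):
    """Drop records that are missing an amount."""
    return [r for r in records if r.get("amount") is not None]


def transform(records):
    """Convert amounts from strings to floats."""
    return [{**r, "amount": float(r["amount"])} for r in records]


def aggregate(records):
    """Sum amounts per customer."""
    totals = {}
    for r in records:
        totals[r["customer"]] = totals.get(r["customer"], 0.0) + r["amount"]
    return [{"customer": c, "total": t} for c, t in sorted(totals.items())]


def run_pipeline(records, steps):
    """Run steps in order; an exception in any step stops the later ones."""
    for step in steps:
        records = step(records)
    return records


# Hypothetical sample input: one record has no amount and is dropped by clean().
raw = [
    {"customer": "a", "amount": "10.5"},
    {"customer": "b", "amount": None},
    {"customer": "a", "amount": "4.5"},
]
result = run_pipeline(raw, [clean, transform, aggregate])
print(result)  # [{'customer': 'a', 'total': 15.0}]
```

Because `run_pipeline` simply lets exceptions propagate, a failure in one step prevents every later step from running, which mirrors the dependency between steps described above.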

Data Pipeline Use Cases

Let’s start by understanding the goals achievable with robust data pipelines:

1. Data Prep for Visualization: Data pipelines make visualization easier by gathering data and transforming it into a usable state. Organizations use data visualization to identify patterns and trends and to communicate findings effectively.

2. Data Integration: A data pipeline gathers data from disparate sources into a unified view. This unified data enables better comparison, contrast, and decision-making.

3. Machine Learning: Data pipelines supply machine learning algorithms with the prepared data they need, which makes AI applications possible.

4. Data Quality: Data pipelines improve data consistency, quality, and reliability through cleaning processes. High-quality data leads to more accurate conclusions and informed business decisions.

Components of a Data Pipeline

To build robust data pipelines, let’s explore the essential components:

1. Data Sources: These are systems generating data for your business. Sources include analytics data, transactional data, and third-party data.

2. Data Collection (Ingestion): Moving data into the pipeline involves collecting data from various sources. Quality checks during ingestion are non-negotiable.

3. Data Transformation: Transforming raw data into a usable format. This step includes cleaning, aggregating, and enriching data.

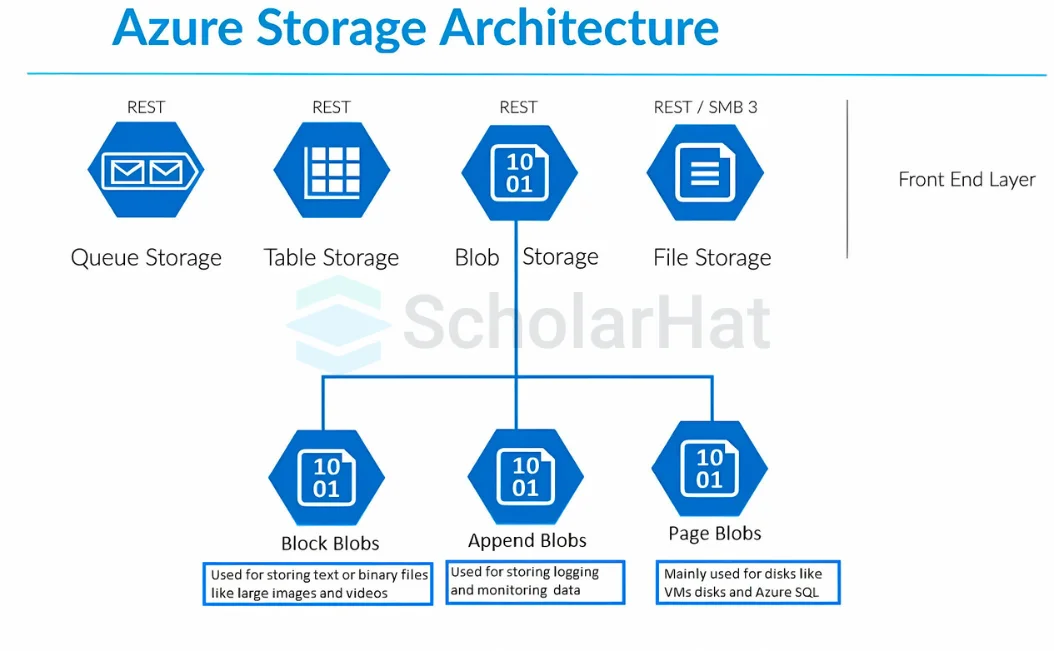

4. Data Storage: Choosing the right storage solution (databases, data lakes, warehouses) based on scalability and performance requirements.

5. Data Processing: Performing computations, joins, and calculations on the data.

6. Data Orchestration: Managing the flow of data through the pipeline, ensuring tasks execute in the correct order.

7. Monitoring and Logging: Monitoring pipeline health, detecting anomalies, and logging events for troubleshooting.

8. Error Handling and Recovery: Handling failures gracefully and ensuring data consistency.
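As a rough illustration of the orchestration component, the sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to run tasks only after the tasks they depend on. The task names and the `run_task` stub are hypothetical; a real deployment would normally use a dedicated orchestrator rather than a hand-rolled loop.

```python
from graphlib import TopologicalSorter

# Hypothetical tasks: each key maps to the set of tasks it depends on.
dependencies = {
    "ingest": set(),
    "validate": {"ingest"},
    "transform": {"validate"},
    "load": {"transform"},
    "quality_report": {"validate"},
    "dashboard": {"load"},
}


def run_task(name, log):
    """Stand-in for real work: record that the task ran."""
    log.append(name)


def run_all(dependencies):
    """Execute every task after all of its dependencies have run."""
    log = []
    # static_order() yields tasks so that dependencies always come first;
    # it raises graphlib.CycleError if the dependencies form a cycle.
    for task in TopologicalSorter(dependencies).static_order():
        run_task(task, log)
    return log


execution_order = run_all(dependencies)
print(execution_order)
```

Independent tasks such as `quality_report` and `load` may appear in either relative order; the only guarantee is that no task runs before the tasks it depends on.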

Best Practices for Building Robust Data Pipelines

1. Set Clear Goals: Define objectives and key metrics before building the pipeline. Understand what value you aim to extract.

2. Data Quality Checks: Implement validation and quality checks at every stage. Garbage in, garbage out: bad input produces bad output, so verify data integrity before passing data downstream.

3. Scalability: Design pipelines to handle increasing data volumes. Consider horizontal scaling and cloud-based solutions.

4. Security: Protect sensitive data. Use encryption, access controls, and secure connections.

5. Maintain Data Lineage and Metadata: Document the flow of data and maintain metadata for auditing and troubleshooting.

6. Monitoring and Logging: Set up robust monitoring to detect issues early. Log relevant events for analysis.

7. Version Control and Documentation: Treat pipelines as code. Keep pipeline definitions under version control and document every change.

8. Error Handling and Recovery: Plan for failures. Implement retries, alerts, and recovery mechanisms.
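Two of these practices, quality checks and retries, can be sketched in a few lines of plain Python. Everything here is an assumption made for illustration: the `validate` rules (required `id` and `amount` fields, no negative amounts), the `with_retries` helper, and the `flaky_fetch` source that simulates two transient failures before succeeding.

```python
import time


class ValidationError(Exception):
    """Raised when a batch fails a quality check."""


def validate(records, required=("id", "amount")):
    """Reject the batch if a record lacks a required field or has a negative amount."""
    for i, r in enumerate(records):
        missing = [f for f in required if r.get(f) is None]
        if missing:
            raise ValidationError(f"record {i} is missing {missing}")
        if r["amount"] < 0:
            raise ValidationError(f"record {i} has a negative amount")
    return records


def with_retries(func, attempts=3, base_delay=0.01, retry_on=(ConnectionError,)):
    """Call func, retrying transient errors with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except retry_on:
            if attempt == attempts:
                raise  # out of attempts: surface the error so it can be alerted on
            time.sleep(base_delay * 2 ** (attempt - 1))


# Hypothetical source that fails twice before returning data.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary outage")
    return [{"id": 1, "amount": 20.0}, {"id": 2, "amount": 5.0}]


batch = validate(with_retries(flaky_fetch))
print(len(batch), calls["count"])  # 2 3
```

Only errors listed in `retry_on` are retried. A `ValidationError` is deliberately not retried, since re-running the same step on the same bad data would fail again; it should instead trigger an alert or route the batch for inspection.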

Real-World Examples

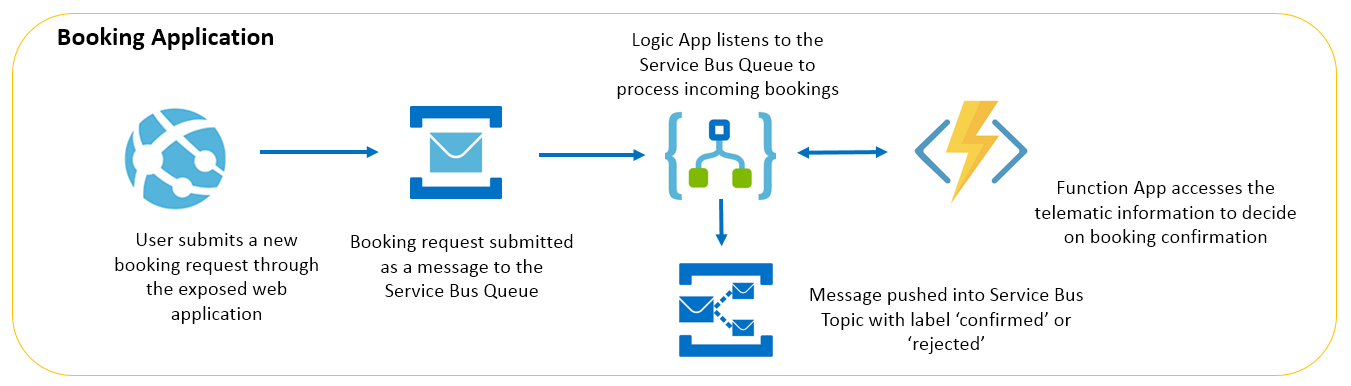

1. Streaming Data Pipeline: Using Apache Kafka and Apache Flink to process real-time data from IoT devices.

2. Batch Data Pipeline: Leveraging Apache Spark for ETL (Extract, Transform, Load) jobs on historical data.

3. Cloud-Native Pipelines: Building pipelines on managed services such as AWS Glue, Google Cloud Dataflow, or Azure Data Factory.

Remember, robust data pipelines are the backbone of successful analytics. Invest time in designing, monitoring, and maintaining them to unlock the full potential of your data.
